Guide for a GitLab job and KIND

The GitLab job is executed by a Docker container, which in turn needs Docker to run KIND.

I recently had some trouble trying to execute KIND in a GitLab CI pipeline. It was hard enough that I thought a guide would be useful for other people too. Let's go step by step.

Configure Docker in Docker in GitLab

As the name KIND says, it's Kubernetes in Docker, which means the runner of the pipeline needs to be able to execute docker commands. For context, I am using a shared runner with the Docker executor.

That means we have to deal with Docker in Docker. GitLab documents how to achieve that on its "Use Docker to build Docker images" page. The key concept to take from there is that docker commands are executed over the network. On your local machine, if you want to use Docker from inside a container, you might mount docker.sock as a volume so that the container uses the "parent" Docker daemon. GitLab runners instead "mount" Docker through the network: you can echo $DOCKER_HOST in the pipeline and you should see something like tcp://docker:2376.
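To make a value like tcp://docker:2376 less opaque, here is a small sketch in POSIX shell that splits a DOCKER_HOST-style URL into host and port. The function names are my own, purely for illustration, and the only thing assumed is the tcp://host:port shape mentioned above.

```shell
#!/bin/sh
# Hypothetical helpers (not part of Docker or GitLab): split a DOCKER_HOST
# value of the form tcp://host:port into its two parts.

docker_host_name() {
    hostport="${1#tcp://}"            # drop the scheme
    printf '%s\n' "${hostport%%:*}"   # keep everything before the first colon
}

docker_host_port() {
    hostport="${1#tcp://}"
    printf '%s\n' "${hostport##*:}"   # keep everything after the last colon
}
```

In a job you could call docker_host_name "$DOCKER_HOST"; for the value above that gives docker, which is the service alias, while the port 2376 is by Docker convention the TLS port (2375 being the unencrypted one).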

Configuring a job that runs in Docker and itself executes docker commands (Docker in Docker) is actually not that difficult. You just need to use an image that has Docker installed and add this:

services:
  - name: public.ecr.aws/docker/library/docker:23.0.6-dind
    alias: docker

so we have something like this:

kind-job:
  image: public.ecr.aws/docker/library/docker:24.0.5-dind-alpine3.18
  services:
    - name: public.ecr.aws/docker/library/docker:23.0.6-dind
      alias: docker

Please don't mind that the two Docker versions don't match; it doesn't really matter here.

KIND in the runner

The next step is to install KIND in the executor. Basically, copying from this example, you can add these lines to the job's before_script:

kind-job:
  image: public.ecr.aws/docker/library/docker:24.0.5-dind-alpine3.18
  services:
    - name: public.ecr.aws/docker/library/docker:23.0.6-dind
      alias: docker
  variables:
    KUBECTL: v1.27.4
    KIND: v0.20.0
  before_script:
    - apk add -U wget
    - wget -O /usr/local/bin/kind https://github.com/kubernetes-sigs/kind/releases/download/${KIND}/kind-linux-amd64
    - chmod +x /usr/local/bin/kind
    - wget -O /usr/local/bin/kubectl https://storage.googleapis.com/kubernetes-release/release/${KUBECTL}/bin/linux/amd64/kubectl
    - chmod +x /usr/local/bin/kubectl

That installs KIND in the docker executor.
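The URLs above hard-code amd64. As a hedged sketch, the same downloads can be parameterised by architecture. The helper names below are hypothetical, and I am assuming that the arm64 binaries are published under the same URL pattern as the amd64 ones.

```shell
#!/bin/sh
# Hypothetical helpers: build the download URLs used above, but pick the
# architecture suffix from a `uname -m` value instead of hard-coding amd64.

release_arch() {
    # $1 = machine name as printed by `uname -m`
    case "$1" in
        x86_64)        echo amd64 ;;
        aarch64|arm64) echo arm64 ;;
        *)             echo "unsupported machine: $1" >&2; return 1 ;;
    esac
}

kind_url() {
    # $1 = kind version (e.g. v0.20.0), $2 = arch (amd64 or arm64)
    echo "https://github.com/kubernetes-sigs/kind/releases/download/$1/kind-linux-$2"
}

kubectl_url() {
    # $1 = kubectl version (e.g. v1.27.4), $2 = arch (amd64 or arm64)
    echo "https://storage.googleapis.com/kubernetes-release/release/$1/bin/linux/$2/kubectl"
}
```

In the before_script this could be used as arch="$(release_arch "$(uname -m)")" followed by wget -O /usr/local/bin/kind "$(kind_url "$KIND" "$arch")", and likewise for kubectl.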

Alternatively, and I like this option more, you can bake all of that into your own image and use it as the job image. The Dockerfile of that image might look like this:

ARG DOCKER_VERSION=24.0.5
ARG OS_VERSION=alpine3.18
FROM public.ecr.aws/docker/library/docker:${DOCKER_VERSION}-${OS_VERSION}

# Run "curl -L -s https://dl.k8s.io/release/stable.txt" to find the latest stable version.
ARG KUBECTL_LATEST_STABLE=v1.27.4
ARG KIND_VERSION=v0.20.0

RUN apk add -U wget
RUN wget -O /usr/local/bin/kind https://github.com/kubernetes-sigs/kind/releases/download/${KIND_VERSION}/kind-linux-amd64
RUN chmod +x /usr/local/bin/kind
RUN wget -O /usr/local/bin/kubectl https://storage.googleapis.com/kubernetes-release/release/${KUBECTL_LATEST_STABLE}/bin/linux/amd64/kubectl
RUN chmod +x /usr/local/bin/kubectl

Either way, the result is the same. With that in place, we can add kind create cluster --name kind-cluster to our script and it will work, but you will have lots of trouble accessing this cluster.

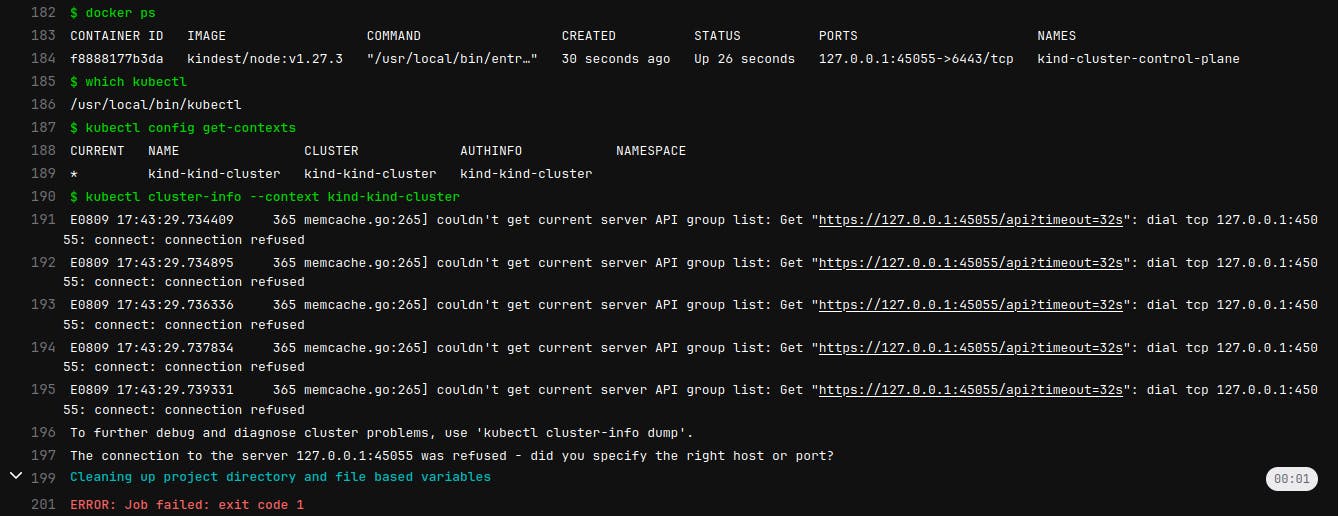

Problem #1 KIND network

If you try to execute any kubectl command you will get output similar to this (let me write it out so it can be indexed :)

couldn't get current server API group list: Get "https://127.0.0.1:45055/api?timeout=32s": dial tcp 127.0.0.1:45055: connect: connection refused

The connection to the server 127.0.0.1:45055 was refused - did you specify the right host or port?

That's the same error you see when no cluster has been created yet. So where the hell is this cluster?

This is more or less what's happening. kind talks to the Docker daemon of the dind service (through DOCKER_HOST), so the cluster's node container is created over there, not inside the job container. By default kind publishes the API server port on 127.0.0.1 of that daemon's host and writes that address into the kubeconfig. Inside the job container, however, 127.0.0.1 is the job container itself, where nothing is listening, so every kubectl command complains that it cannot reach the cluster.

To solve this, we need to add some configuration to kind. Create a file called, for instance, pipeline-kind-config.yaml and add this:

apiVersion: kind.x-k8s.io/v1alpha4
kind: Cluster
networking:
  apiServerAddress: 0.0.0.0

Here is the source for that, but do NOT add the rest just yet. Let's solve the problems one by one.

Change the pipeline so that kind create cluster runs with this config:

kind-job:
  image: public.ecr.aws/docker/library/docker:24.0.5-dind-alpine3.18
  services:
    - name: public.ecr.aws/docker/library/docker:23.0.6-dind
      alias: docker
  variables:
    KUBECTL: v1.27.4
    KIND: v0.20.0
  before_script:
    - apk add -U wget
    - wget -O /usr/local/bin/kind https://github.com/kubernetes-sigs/kind/releases/download/${KIND}/kind-linux-amd64
    - chmod +x /usr/local/bin/kind
    - wget -O /usr/local/bin/kubectl https://storage.googleapis.com/kubernetes-release/release/${KUBECTL}/bin/linux/amd64/kubectl
    - chmod +x /usr/local/bin/kubectl
  script:
    - kind create cluster --name kind-cluster --config=pipeline-kind-config.yaml

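As far as I understand it, with apiServerAddress set to 0.0.0.0 the API server port is published on all interfaces of the dind service rather than only on its loopback, so the cluster becomes reachable over the network from the job container, where the kubectl commands run.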

Problem #2 Kubectl network

But what's happening now? A similar error: kubectl is no longer trying to connect to localhost:randomPort but to 0.0.0.0:randomPort. That is a step forward, but the cluster is still not there.

When the cluster is created, kind writes the file $HOME/.kube/config, which contains something like this:

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: base64certificate
    server: https://0.0.0.0:38079
  name: kind-cluster

The server address is taken from the kind config, which is why it's 0.0.0.0. We need to change that to "docker". Why "docker"? Because it is the alias we gave to the dind service, so it is the hostname under which the job container can reach the daemon that runs the cluster and publishes the API server port.

kind-job:
  image: public.ecr.aws/docker/library/docker:24.0.5-dind-alpine3.18
  services:
    - name: public.ecr.aws/docker/library/docker:23.0.6-dind
      alias: docker # <----- because of this
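When debugging this, it helps to print the address the kubeconfig actually points at before and after any change. A minimal sketch, assuming the kubeconfig layout shown above; kubeconfig_servers is a hypothetical helper name of mine, not a kubectl or kind command.

```shell
#!/bin/sh
# Hypothetical helper: print every API server URL found in a kubeconfig file.
kubeconfig_servers() {
    # $1 = path to the kubeconfig (defaults to the file kind writes)
    # -n plus the trailing p prints only the lines where the substitution matched.
    sed -n 's/^[[:space:]]*server:[[:space:]]*//p' "${1:-$HOME/.kube/config}"
}
```

Dropping kubeconfig_servers into the job script right after kind create cluster shows at a glance whether kubectl is aimed at 127.0.0.1, 0.0.0.0 or the service alias.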

So let's change that file using sed. You have probably seen this command in the link above and might have been wondering why I wasn't copying it. Well, now is the time.

This is the complete job:

kind-job:
  image: public.ecr.aws/docker/library/docker:24.0.5-dind-alpine3.18
  services:
    - name: public.ecr.aws/docker/library/docker:23.0.6-dind
      alias: docker
  variables:
    KUBECTL: v1.27.4
    KIND: v0.20.0
  before_script:
    - apk add -U wget
    - wget -O /usr/local/bin/kind https://github.com/kubernetes-sigs/kind/releases/download/${KIND}/kind-linux-amd64
    - chmod +x /usr/local/bin/kind
    - wget -O /usr/local/bin/kubectl https://storage.googleapis.com/kubernetes-release/release/${KUBECTL}/bin/linux/amd64/kubectl
    - chmod +x /usr/local/bin/kubectl
  script:
    - kind create cluster --name kind-cluster --config=pipeline-kind-config.yaml
    - sed -i -E -e "s/localhost|0\.0\.0\.0/docker/g" "$HOME/.kube/config"
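The sed above replaces every occurrence of localhost or 0.0.0.0 anywhere in the file. A slightly narrower variant, sketched below, only touches the host part of the server: lines. The function name is hypothetical, and matching 127.0.0.1 as well is my own addition, not something the original command does.

```shell
#!/bin/sh
# Hypothetical helper: point the kubeconfig at the dind service alias, touching
# only the host in "server:" lines rather than every match in the file.
point_kubeconfig_at() {
    # $1 = hostname to use (e.g. docker), $2 = kubeconfig path
    # The address prefix restricts the substitution to lines starting with "server:".
    sed -i -E -e "/^[[:space:]]*server:/ s#//(localhost|127\.0\.0\.1|0\.0\.0\.0):#//$1:#" "$2"
}
```

In the job this would be called as point_kubeconfig_at docker "$HOME/.kube/config". Like the original command, it relies on sed -i without a suffix argument, which works with GNU and BusyBox sed but not with the BSD sed shipped on macOS.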

What's not working?

I tried to use "docker" with the kind config file like this:

apiVersion: kind.x-k8s.io/v1alpha4
kind: Cluster
networking:
  apiServerAddress: docker

But that doesn't work:

I guess that for cluster creation KIND somehow needs 0.0.0.0. I don't know enough to explain why, or why in this case it tries to assign port 0, while in the other case the port really is something random. So maybe this approach can work, but it is missing some extra configuration unknown to me.

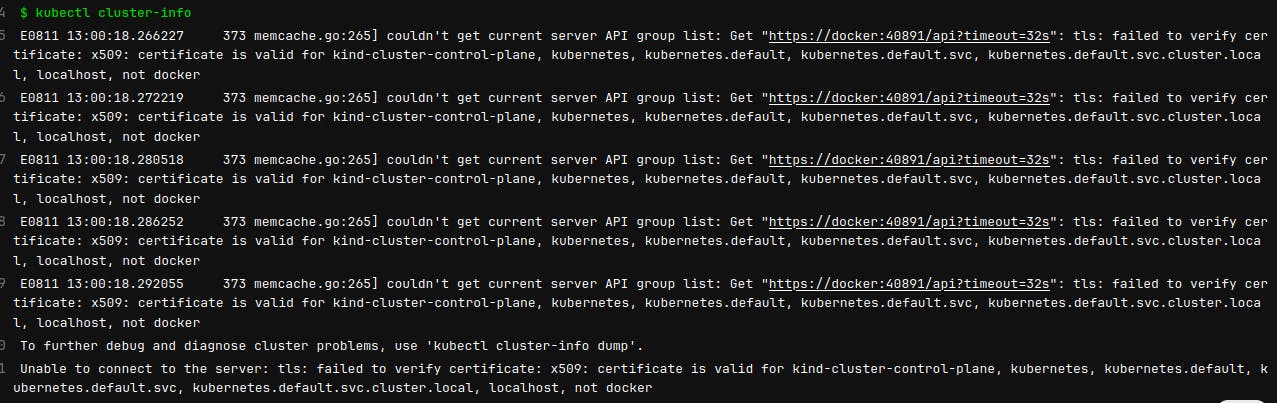

Problem #3 Certificates

Couldn't get current server API group list: Get "https://docker:40891/api?timeout=32s": tls: failed to verify certificate: x509: certificate is valid for kind-cluster-control-plane, kubernetes, kubernetes.default, kubernetes.default.svc, kubernetes.default.svc.cluster.local, localhost, not docker

Alternative solution

In an attempt to solve that, I changed the service alias in the pipeline from docker to kubernetes; after all, it is one of the names the certificate is valid for. In that case, you need to change the sed accordingly: sed -i -E -e "s/localhost|0\.0\.0\.0/kubernetes/g" "$HOME/.kube/config". But then you get a similar error, not on the kubectl commands but on any docker command (for example the docker ps I had in there just to debug), saying that the other certificate (presumably the Docker daemon's own) is not valid for the name kubernetes, only for other names, among them localhost!

So you can change the alias of the GitLab service to "localhost", and make the sed command write "localhost", and it all works all right, but keep reading for a better solution.

Almost there. It seems we need to add a SAN (Subject Alternative Name) to the certificate. There is a Stack Overflow question that gives valuable background on how those certificates work in Kubernetes.

If we were using plain Kubernetes, we would use kubeadm and either give it a configuration file like this:

apiServer:
  certSANs:
    - "docker"

Or we could use a kubeadm flag; there is one that does exactly what we need: --apiserver-cert-extra-sans strings (reference).

But how to do that in KIND?

We've come across some code that seems to solve exactly that problem, right? For example, [here](https://github.com/kind-ci/examples/blob/master/gitlab/kind-config.yaml) they do this:

# add to the apiServer certSANs the name of the docker (dind) service in order to be able to reach the cluster through it
kubeadmConfigPatchesJSON6902:
  - group: kubeadm.k8s.io
    version: v1beta2
    kind: ClusterConfiguration
    patch: |
      - op: add
        path: /apiServer/certSANs/-
        value: docker

It really looks like this is the solution. Is it? Well, no luck with that. Actually, if you experiment with the kind configuration file (better to try locally, more on that at the bottom), you will see some errors spat out, along with a suspicious warning that it is ignoring a YAML document, which I believe is the YAML resulting from the configuration above.

Ok so if it doesn't work, what does? Let's read the KIND documentation on Kubeadm config patches. It says:

Formally KIND runs kubeadm init on the first control-plane node, we can customize the flags by using kubeadm InitConfiguration.

Well, that was a little misleading for me, because I thought I could somehow pass the --apiserver-cert-extra-sans flag and the problem would be solved. But if you look at the example, it doesn't seem to be talking about adding flags that way. It also links to this page, which has an extract like this:

apiServer:
  extraArgs:
    authorization-mode: "Node,RBAC"
  extraVolumes:
    - name: "some-volume"
      hostPath: "/etc/some-path"
      mountPath: "/etc/some-pod-path"
      readOnly: false
      pathType: File
  certSANs:
    - "10.100.1.1"
    - "ec2-10-100-0-1.compute-1.amazonaws.com"
  timeoutForControlPlane: 4m0s

OK, that looks similar to the example in the KIND documentation, and also very similar to a config file that you would pass to kubeadm.

By the way, KIND does indeed always run kubeadm init with the --config option and a file (plus some other options that you can see whenever creating a KIND cluster breaks).

Translating this into my actual problem, I end up with a KIND configuration file like this:

apiVersion: kind.x-k8s.io/v1alpha4
kind: Cluster
networking:
  apiServerAddress: 0.0.0.0
kubeadmConfigPatches:
  - |
    kind: ClusterConfiguration
    apiServer:
      certSANs:
        - "docker"

And finally! That adds the SAN to the certificate and everything works just fine. You can check that the SAN was added by putting these two lines in the GitLab script:

- docker cp kind-cluster-control-plane:/etc/kubernetes/pki/apiserver.crt .

- openssl x509 -text -noout -in apiserver.crt

Just make sure that the name of the container KIND created is the one used above, then check the output: you should see the extra SAN names.
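Reading the full openssl dump by eye gets tedious in a pipeline log, so here is a hedged sketch that turns the check into a pass/fail test. cert_has_dns_san is a hypothetical helper of mine; it assumes the usual openssl text layout in which SANs appear as comma-separated DNS:name entries.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the certificate text on stdin (the output of
# `openssl x509 -text -noout`) lists the given DNS name as a SAN.
cert_has_dns_san() {
    # $1 = DNS name to look for
    # Put each comma-separated entry on its own line, trim surrounding
    # whitespace, then require an exact match on "DNS:<name>".
    tr ',' '\n' | sed 's/^[[:space:]]*//; s/[[:space:]]*$//' | grep -Fxq "DNS:$1"
}
```

In the job it could be used as openssl x509 -text -noout -in apiserver.crt | cert_has_dns_san docker, which fails the step when the SAN is missing instead of silently printing the certificate.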

TL;DR: Final Configuration

To make it work you need a KIND configuration file called, for example, pipeline-kind-config.yaml like this:

apiVersion: kind.x-k8s.io/v1alpha4
kind: Cluster
networking:
  apiServerAddress: 0.0.0.0
kubeadmConfigPatches:
  - |
    kind: ClusterConfiguration
    apiServer:
      certSANs:
        - "docker"
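Since the SAN has to match the service alias exactly, one option is to generate this file in the job from a single value rather than committing it. This is only a sketch of that idea; write_kind_config is a hypothetical helper, and the file it writes is the same configuration as above with the SAN taken from a parameter.

```shell
#!/bin/sh
# Hypothetical helper: write the kind config shown above, with the extra SAN
# taken from a parameter so it cannot drift from the service alias.
write_kind_config() {
    # $1 = service alias to add as SAN (e.g. docker), $2 = output file
    cat > "$2" <<EOF
apiVersion: kind.x-k8s.io/v1alpha4
kind: Cluster
networking:
  apiServerAddress: 0.0.0.0
kubeadmConfigPatches:
  - |
    kind: ClusterConfiguration
    apiServer:
      certSANs:
        - "$1"
EOF
}
```

For example, write_kind_config docker pipeline-kind-config.yaml could run just before kind create cluster, with the same alias then reused in the sed step.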

And the job in GitLab should look like this:

kind-job:
  image: public.ecr.aws/docker/library/docker:24.0.5-dind-alpine3.18
  services:
    - name: public.ecr.aws/docker/library/docker:23.0.6-dind
      alias: docker
  variables:
    KUBECTL: v1.27.4
    KIND: v0.20.0
  before_script:
    - apk add -U wget
    - wget -O /usr/local/bin/kind https://github.com/kubernetes-sigs/kind/releases/download/${KIND}/kind-linux-amd64
    - chmod +x /usr/local/bin/kind
    - wget -O /usr/local/bin/kubectl https://storage.googleapis.com/kubernetes-release/release/${KUBECTL}/bin/linux/amd64/kubectl
    - chmod +x /usr/local/bin/kubectl
  # Alternatively, instead of this before_script, bake these steps into your own image and use that as the job image.
  script:
    - kind create cluster --name kind-cluster --config=pipeline-kind-config.yaml
    - sed -i -E -e "s/localhost|0\.0\.0\.0/docker/g" "$HOME/.kube/config"
    - kubectl apply -f deployment.yaml
    - [...]

I hope this helps people.

Problems when running locally

To troubleshoot faster than by pushing my changes to the pipeline every time, I debugged on my local machine, and I ran into a couple of problems whose solutions were not obvious to me.

First, if you forget to comment out this part:

networking:
  apiServerAddress: 0.0.0.0

you will basically block the creation of any cluster since there will be no place available.

Second, try to use different names for your clusters. If you create a cluster-1 with a faulty configuration and it breaks, then even if you delete all traces of it, trying to create a cluster with the same name cluster-1 again might give you the exact same error, even though this time the configuration is perfectly fine. That cost me some hours!
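One way to follow that advice without thinking about it is to derive the name from the current time. A minimal sketch; unique_cluster_name is a hypothetical helper, and as far as I know kind only accepts lowercase letters, digits, dots and dashes in cluster names, so the prefix should stick to those.

```shell
#!/bin/sh
# Hypothetical helper: build a cluster name that changes from one attempt to
# the next (one-second resolution), so a failed attempt never shares its name
# with the following one.
unique_cluster_name() {
    # $1 = prefix (lowercase letters, digits, dots, dashes)
    # date +%s gives seconds since the epoch; $$ is the PID of the calling shell.
    echo "$1-$(date +%s)-$$"
}
```

Locally this would be used as name="$(unique_cluster_name debug)" followed by kind create cluster --name "$name" --config=pipeline-kind-config.yaml, and later kind delete cluster --name "$name" to clean up.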